Social Compliance in Cult Behavior and Political Propaganda

Ah Kwong

People who live in modern societies desire freedom of choice and the power to control and be the master of their own behavior. However, people are often unaware that their behavior is strongly affected by the people around them and guided by the social norms and particular ideology of their social group, to an extent that they do not fully comprehend.

Social influence theory of Dr. R. Cialdini

In his book “Influence: Science and Practice”, Dr. Cialdini explains how social forces influence people’s everyday decision making. In the first chapter, “Weapons of Influence”, he uses a dual-process approach (controlled vs. automatic processing) to describe human information processing.

According to Dr. Cialdini, when people process a piece of information by careful analysis, they are using controlled processing. By contrast, when they deal with unfamiliar topics, they tend to defer to authorities. That is, rather than thinking through an expert’s argument and being convinced (or not), they often ignore the logical arguments themselves and simply trust the expert’s expertise. This tendency to respond mechanically to information is called automatic processing.

Automatic processing is prevalent in human action because it is the most efficient way of behaving. People in modern society are too busy to take the time and energy to carefully analyze every bit of information they encounter. They need shortcuts to deal with complicated modern life, so they habitually rely on the easier “click, whirr” approach of automatic processing. It is a process of compliance, a human propensity for automatic, shortcut responses.

This automatic compliance processing is efficient and convenient, but it can sometimes be dangerous if the authority or the source of influence is misleading, which is why Dr. Cialdini calls it a “weapon”. Cialdini states that “there are some people who know very well where the weapons of automatic influence lie and who employ them regularly and expertly to get what they want.” This is also how commercial advertising and political propaganda work.

Dual-process of information processing

Social psychologists who study human cognition broadly divide it into two approaches, “hot” and “cold”. In other words, there is a dual process of cognition (Gilovich & Griffin, 2002; Petty, 2004). From the “cold” perspective, human information processing is based on effortful, reflective thinking, in which no action is taken until its potential consequences are properly weighed and evaluated. From the “hot” perspective, human behavior and decision making are based on minimal cognitive effort, in which behavior is often impulsively and unintentionally activated by emotions, habits, or biological drives.

Petty and Cacioppo’s (1986) work shows that we can take the central route to persuasion, in which whether or not our attitude changes is determined by the proportion of thoughts we generate that are consistent with, or counter to, the persuasive message. Alternatively, we can take the peripheral route to persuasion. In this process, “we pay attention to cues that are irrelevant to the content or quality of the communication, such as the attractiveness of the communicator or the sheer amount of information presented”.

Social scientists also make a distinction between explicit cognition and implicit cognition.

Explicit cognition involves deliberate judgments or decisions, of which we are

consciously aware, while implicit cognition involves judgments or decisions that

are under the control of automatically activated evaluations occurring without

our awareness.

To summarize the discussion so far, human decision making is a dynamic process. At best, we take a rational approach and analyze information before a decision is made, and a piece of information must fit our schema in order to persuade us. However, in certain situations people are simply not rational enough, especially when they lack ability, time or energy, and they turn to the automatic process. The automatic process of compliance most often happens in the following situations:

· When people lack the ability to analyze
· When they feel uncertain about the situation
· When they believe in authority, and
· When they want to avoid being different from their cultural group.

|

|

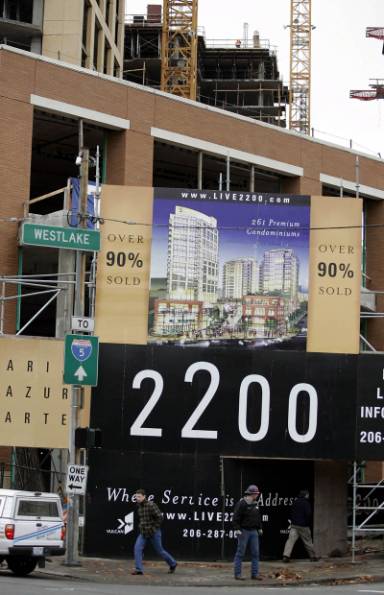

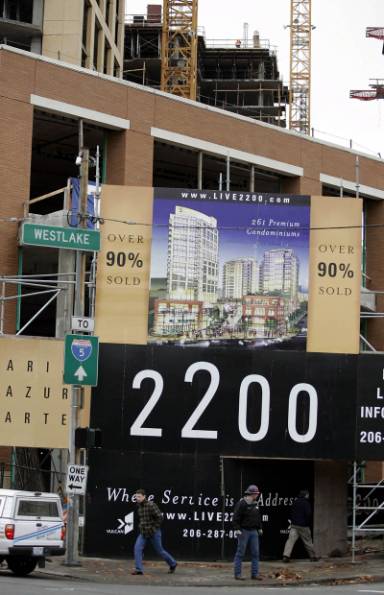

In the

consumer world, people just believe in what an advertisement says. When

people look at a poster or TV advertisement, they mostly do not bother to

analyze it. Like this advisement about the condominiums in Seattle which

is still under construction. By stating,

“OVER 90% SOLD”, it probably makes most people believe these

condominiums are desirable, if they don’t buy one soon, they will miss

their chance.

(AP)

|

Social Proof

In chapter 4 of his book, Dr. Cialdini discusses the principle of “Social Proof”. According to this principle, “we determine what is correct by finding out what other people think is correct. We view a behavior as correct in a given situation to the degree that we see others performing it.” For example, when we see somebody lying on the street, we may initially want to help him, but on seeing all the other people walk by and do nothing, we probably say to ourselves, “Oh, maybe it is not an emergency, since no one is going to help him.” We are affected by others and adapt our behavior so that we won’t stand out from the crowd. We observe others to determine what the correct behavior is for ourselves. Social pressure is a powerful controlling force.

Doomsday cult

Dr. Cialdini describes two cult cases, which I find most interesting. One of them is a doomsday cult based in Chicago in the 1950s. Three scientists, Leon Festinger, Henry Riecken, and Stanley Schachter, who were then colleagues at the University of Minnesota, set out to investigate this cult by joining the group. They gained a first-hand perspective from inside the organization. The cult was led by a middle-aged man and woman, given the pseudonyms Dr. Thomas Armstrong and Mrs. Marian Keech, who had a long-held interest in mysticism and flying saucers. Mrs. Keech claimed that she received messages from spiritual beings living on other planets, and she transcribed these “messages” via “automatic writing”. She predicted that the world was going to experience a major disaster, a flood that would engulf the world. She told the cult members (about 30 in all) to give up everything and prepare to be rescued by flying saucers.

Of course, when “the night of the flood” came, nothing happened: no flood, and no saucer to rescue them. They felt a deep sense of disappointment and loss. So what made the members believe so totally in such an unrealistic tale? First, the cult members’ level of commitment to the cult’s belief system was very high. Second, they were isolated from the outside world and ignored outside information. Even when, as the day of disaster approached, many newspaper, television, and radio reporters converged on the group’s headquarters in Mrs. Keech’s house, the cult members simply ignored them. Third, they had invested so much in this belief, giving up their jobs and property, that their commitment to the belief system was strengthened further. In terms of the theory of social proof, these people faced uncertainty about the future and about whether there would be a great flood, so they tended to believe in the authority of the cult, Mrs. Keech. They copied the other cult members’ actions and beliefs because these were the correct behavior patterns within the group.

Jonestown

The other extreme cult case Dr. Cialdini mentions is the infamous People’s Temple and the Jonestown massacre, or mass suicide.

The People’s Temple was a cult-like religious organization founded in Indianapolis in the 1950s by Jim Jones, a monomaniacal preacher. It was first named "Wings of Deliverance", and he later renamed it the "People's Temple Full Gospel Church". He upheld racial equality and social justice in his church, so it drew a large number of poor people from the city, particularly African Americans, but its members also included some educated middle-class white men.

Jones moved his congregation to his main church in San Francisco in 1971. Facing several scandals and investigations, Jones decided that by creating a utopian community in Guyana, they could be free from the intervention of US authorities. At that time, the membership had reached its peak of about 900 people.

In November 1978, after a shooting incident following the visit of Congressman Leo Ryan, they faced a threat from the US government and the Guyanese Defense Force. On November 18, claiming that the government was coming to catch them, Jones provided his followers with poison, a grape-flavored Kool-Aid laced with Valium and cyanide, and provoked a mass suicide that killed almost all of the 900 members. Although some of the followers, especially children, were forced to take the drink, and there is controversy about whether it was a mass suicide or a massacre, some evidence shows that many of the members took the poison voluntarily, even though they saw other people collapse after drinking it.

According to Dr. Cialdini, the behavior of the cult members was an act of compliance. The cult members were in a state of social isolation in the remote jungle of South America, where the conditions of uncertainty and exclusive similarity would make the principle of social proof operate especially well, so they were willing to follow the example of others. In Cialdini’s words: “They hadn’t been hypnotized by Jones; they had been convinced – partly by him but, more importantly, by the principle of social proof – that suicide was the correct conduct.”

It is hard to explain how the words of Jim Jones could lead to such a massively destructive action. One thing he did successfully was to train a group of loyal members, a team of “nurses”, to work for him and guide the behavior of the other cult members. In effect, Jones first persuaded a sizeable proportion of the group members; in a second stage, the raw information that a substantial number of group members had been convinced could, by itself, convince the rest.

Other Compliance Theory

With his social proof theory, Cialdini depicts the behavior of cult members as “Monkey see, monkey do”, an automatic process that leads people to do illogical things without thinking. However, I am not convinced that human behavior can be reduced to that of an animal, because people’s ability to process information also involves a “cold” approach. I think there are other factors that the theory of social proof does not explain.

Psychologists who take the cold perspective state that people make decisions through a careful mental process. In the process of persuasion, the message must fit into their belief system and be consistent with their schema, whether this “belief” is right or wrong. It is this “belief” within the cult culture that has the power to persuade people, lead them to do unbelievably foolish things, and finally bring about compliance.

The belief system within a cult is the social norm that guides the behavior of its members. It is an explicit or implicit system that tells members what the right behavior is within the group; most of the time, it is internalized. According to psychologist Herbert Kelman (1961, 1974), internalization is the adoption of a decision based on the congruence of one’s values with the values of another.

In addition, cult members tend to see themselves as a unit, and they develop a group identity. Kelman has suggested another mode of social influence, identification, whereby a person is believed to make a decision in order to maintain a positive self-defining relationship with another person. Acting as another person acts, or wants one to act, reinforces one’s self-esteem.

In the case of the People’s Temple, the members had a sense of group identity, or a we-identity. They shared a belief that they were a “free, equal and just” group, they believed Jonestown was a paradise on earth, and they accepted Jim Jones’s word that drinking the Kool-Aid would lead them to “eternal peace”. It was this belief that people were willing to “die” for.

Dr. Heidi Riggio’s Commonalities of Cults may best explain this compliance phenomenon:

1. Deification of the leader
2. Deindividuation
3. Social isolation
4. Information isolation
5. Physical manipulation
6. Daily life control

I think that, in addition to these criteria, ideology control is also common in the cult phenomenon. People need a belief to uphold that fits into their own belief systems, one that captures their minds and makes them willing to participate. The ideology system works best with the use of symbols, rituals, and the worship of a “holy” text that states the dogma of the group.

When I was working as a reporter in Hong Kong about 10 years ago, I investigated a cult that was actually controlled by a criminal organization and lured young girls into prostitution. The cult, named “The Green Dragon”, claimed that its followers could lead themselves and their family members to heaven by sacrificing their bodies. “The Green Dragon” attracted a group of young girls who lacked family attachments and longed for care and something meaningful in their lives. They were persuaded to leave their families and were kept together in designated apartments, totally isolated from the outside world. The cult also used rituals to produce a mysterious atmosphere. It set up an altar for worship and printed reading material about the afterlife for the followers to read. These rituals sustained the followers’ feeling of uncertainty, making them believe that there was something supernatural about the cult.

The “Green Dragon” cult performed rituals to produce a mysterious atmosphere.

An altar of the “Green Dragon” cult for followers to worship.

Each girl was persuaded to sell her body about 7-10 times a day, with most of her earnings going to the cult. The girls were willing to do so because they believed so deeply in the cult, and they complied without resistance. The cult was finally shut down by the Hong Kong Police in 2004. Although the leaders of the cult were prosecuted, nothing could compensate the girls for what they had lost. This terribly sad story is another extreme case of how social compliance works.

Political Propaganda and Social Compliance

The cult phenomenon is the extreme situation in which social compliance occurs. However, compliance principles are actually very widely used by authorities at all levels. They are particularly widely used by governments, even in an individualistic society like the United States.

In its effort to win people’s support for the war in Iraq, the Bush administration made extensive use of government propaganda that exploits the principle of social compliance. Its first method was to manipulate the mass media. Most Americans who get their information from TV news, which often consists of 30-second sound bites, don’t realize the close relationship between some television networks and the Bush administration. Fox News is one such example. In an attempt to justify the war, the government tried to connect Iraq with the 9/11 terrorist attack on the World Trade Center in 2001. It was later shown that no such connection existed. This is an example of propaganda, pure and simple. A friend told me that at the beginning of the war he felt that it was wrong, but he didn’t dare to express his feelings for fear of being labeled “unpatriotic”. The “War on Terror” slogan is a powerful discourse, which made people comply out of fear. So it is not hard to understand why, early in the war, the Bush administration had the support of over 60% of the American people. This is a vivid example of social compliance.

Social compliance is used effectively even in so-called free and democratic countries. When it is used in a totalitarian regime, it can be even more devastating. China under the rule of the dictator Mao Zedong is a significant example of social compliance, especially in the period of the “Cultural Revolution” from 1966-1969.

China during that period of time was in a state of isolation from the outside

world. The media was used as a tool to control the people. Although we are

talking about a country, the social situation at that time was much like one big

cult. Here are some manifestations of the Cultural Revolution.

Deification of the leader: Mao Zedong was regarded as the “Savior” of China and the “Great Leader”, who led China out of its “Old World” situation into a “New World” by changing its economic system to communism.

Deindividuation: People at that time were required to wear the same kind of clothing, gray shirts and pants, with men and women dressing the same. Any personal decoration would be viewed as “capitalist”.

Social isolation: China at that time was almost totally isolated from the western world, except for the Soviet Union.

Information isolation: The media was manipulated by the Communist Party, and only spoke for the Party.

Physical manipulation: People who were considered “counter-revolutionaries” would be humiliated publicly, and were often subjected to mental and even physical punishment.

Daily life control: “The people's communes” were established in every city and rural area, and served to manipulate people’s daily lives. Collecting property and crops from citizens and farmers, and distributing food and daily necessities back to them, was called the principle of “give all you have and get what you need”.

Ideology control: Culture and the fine arts were also used by the Party to control the population. At that time, Mao’s wife Jiang Qing was responsible for the “Reform of Fine Arts”. She required that paintings, dramas, and other works serve the Party and reflect the life of the working class positively. In addition, Mao’s words were collected and edited into the “Little Red Book”, which was considered as sacred as the Bible is to Westerners.

People’s mood at that time was constantly hysterical. Although they were being deprived of many personal freedoms, they were willing to participate in this “cult-like” society. Motivated by local authorities, young, impressionable students organized to form the “Red Guard” in order to actualize the communist ideology. The “Red Guard” activities were mostly a voluntary movement. After it received an official public “confirmation” and blessing from Mao Zedong during a gigantic dawn rally on August 18, 1966, the movement spread overwhelmingly across the entire country.

Most young people all over the country took part in the “Red Guard” movement by imitating one another, to show that they were revolutionary. It was an actualization of the principle of social proof.

The “Red Guard” movement ended up becoming the most destructive force in the Cultural Revolution. Upholding the idea of “Destroy the Four Olds” (old ideas, old culture, old customs and old habits), the students went about destroying many cultural treasures. They opposed “old” social institutions and refused to attend classes. They forced teachers and university officers onto the street for public humiliation. Personal property was seized, and countless people who couldn’t take the mental and physical torture ended up committing suicide. The Red Guard movement went out of control through the voluntary “revolutionary” behavior of the students, misguided by their obscure revolutionary ideology in that self-confined social condition. This is another extreme manifestation of social compliance.

Conclusion

Social compliance is a prevalent phenomenon in every society. When people are in a state of uncertainty, they seek to determine what the correct behavior is by observing and copying other people. In addition, people tend to internalize the values and ideology of a group into their own belief system in order to create a group identity, which serves to reinforce the “correct” behavior of the group. It is in the cult phenomenon, where the members are highly isolated, that the most extreme behavior is to be found. The principle of social compliance is also widely used by political authorities; when used in the wrong way, it can be most dangerous and devastating.

---------------------------------------------------------------------------------------------------

Reference:

1. Robert B. Cialdini, “Influence: Science and Practice”, 4th edition. New York: Longman.

2. Franzoi, S.L. (2006). Social Psychology (4th Ed.). New York: McGraw-Hill.

3. Richard P. Bagozzi; Kyu-Hyun Lee, “Multiple Routes for Social Influence: The Role of Compliance, Internalization, and Social Identity”, Social Psychology Quarterly, Vol. 65, No. 3 (Sep., 2002), 226-247.

4. “China’s Cultural Revolution, 1966-1969: Not a Dinner Party”, edited by Michael Schoenhals. Armonk, New York.

5. “China’s Great Proletarian Cultural Revolution: Master Narratives and Post-Mao Counternarratives”, edited by Woei Lien Chong.

6. Robert Jay Lifton, “Revolutionary Immortality: Mao Tse-Tung and the Chinese Cultural Revolution”.